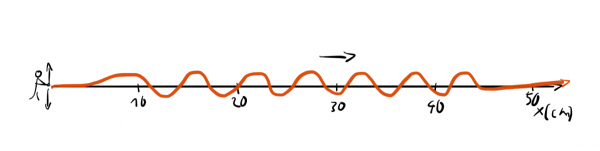

We can generate a wave in a long rope by shaking it rhythmically up and down.

Now, if someone asks us: "Where precisely is the wave?" we wouldn't have a good answer since the wave is spread out. In contrast, if we get asked: "What's the wavelength of the wave?" we could easily answer this question: "It's around 6cm".

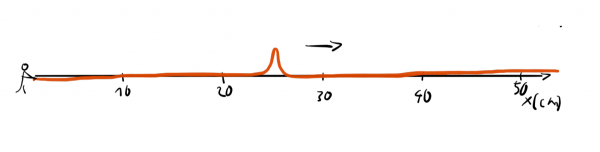

We can also generate a different kind of wave in a rope by jerking it only once.

This way we get a narrow bump that travels down the line. Now, we could easily answer the question: "Where precisely is the wave?" but we would have a hard time answering the question "What's the wavelength of the wave?" since the wave isn't periodic and it is completely unclear how we could assign a wavelength to it.

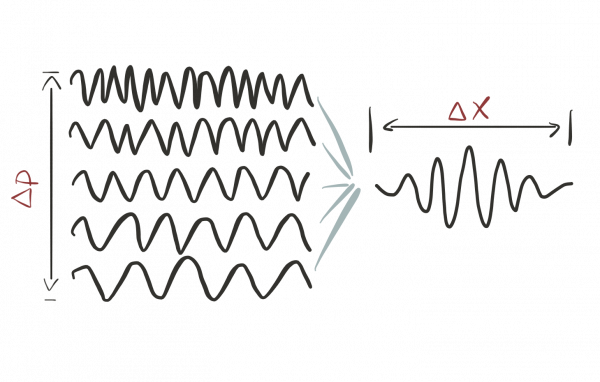

Similarly, we can generate any type of wave between these two edge cases. However, there is always a tradeoff. The more precise the position of the wave is, the less precise its wavelength becomes and vice versa.

This is true for any wave phenomenon, and since quantum mechanics describes particles using waves, it applies there too. In quantum mechanics, the wavelength of the wave that describes a particle is inversely related to the particle's momentum: the larger the momentum, the smaller the wavelength. A spread in wavelength therefore corresponds to a spread in momentum. As a result, we can derive an uncertainty relation that tells us:

The more precisely we determine the location of a particle, the less precisely we can determine its momentum, and vice versa. The reason is that a localized wave bump can be thought of as a superposition of many other waves, each with a well-defined wavelength.

In this sense, such a localized bump does not have one specific wavelength but is a superposition of many.
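The superposition picture can be tried out numerically. The following is a minimal sketch, not a definitive treatment: it assumes cosine waves whose wavenumbers are spread around a central value `k0` with Gaussian weights of width `sigma_k`, and it measures the width of the resulting bump as the standard deviation of position weighted by the squared amplitude. The function names and all parameter values are illustrative choices.

```python
import math

def wave_packet(x, k0=10.0, sigma_k=1.0, n_waves=101, k_span=5.0):
    """Sum cosine waves with wavenumbers clustered around k0 (Gaussian weights)."""
    total = 0.0
    norm = 0.0
    for i in range(n_waves):
        # Wavenumbers evenly spaced within k0 +/- k_span * sigma_k.
        k = k0 + sigma_k * k_span * (2 * i / (n_waves - 1) - 1)
        weight = math.exp(-((k - k0) ** 2) / (2 * sigma_k ** 2))
        total += weight * math.cos(k * x)
        norm += weight
    return total / norm  # normalized so that the packet equals 1 at x = 0

def position_spread(sigma_k, half_width=10.0, n_points=2001):
    """Standard deviation of x, weighted by the squared packet amplitude."""
    dx = 2 * half_width / (n_points - 1)
    xs = [-half_width + i * dx for i in range(n_points)]
    intensity = [wave_packet(x, sigma_k=sigma_k) ** 2 for x in xs]
    total = sum(intensity)
    mean = sum(x * w for x, w in zip(xs, intensity)) / total
    variance = sum((x - mean) ** 2 * w for x, w in zip(xs, intensity)) / total
    return math.sqrt(variance)

# A wider spread of wavenumbers gives a narrower bump, and vice versa.
spread_by_sigma_k = {s: position_spread(s) for s in (0.5, 1.0, 2.0)}
for s, width in spread_by_sigma_k.items():
    print(f"sigma_k = {s:3.1f}  ->  position spread = {width:.3f}")
```

In this sketch, doubling the spread of wavenumbers halves the width of the bump, which is the tradeoff described above.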

Heisenberg sometimes explained the uncertainty principle as a problem of making measurements. His most well-known thought experiment involved photographing an electron. To take the picture, a scientist might bounce a light particle off the electron's surface. That would reveal its position, but it would also impart energy to the electron, causing it to move. Learning about the electron's position would create uncertainty in its velocity; and the act of measurement would produce the uncertainty needed to satisfy the principle.

Bohr, for his part, explained uncertainty by pointing out that answering certain questions necessitates not answering others. To measure position, we need a stationary measuring object, like a fixed photographic plate. This plate defines a fixed frame of reference. To measure velocity, by contrast, we need an apparatus that allows for some recoil, and hence moveable parts. This experiment requires a movable frame. Testing one therefore means not testing the other.

https://opinionator.blogs.nytimes.com/2013/07/21/nothing-to-see-here-demoting-the-uncertainty-principle/

Whenever we measure an observable in quantum mechanics, we get a precise answer. However, if we repeat our measurement on equally prepared systems, we do not always get exactly the same result. Instead, the results are spread around some central value.

While we can prepare our systems such that a repeated measurement always yields almost exactly the same value, there is a price we have to pay for that: the measurements of some other observable will be wildly scattered.

The most famous example is the position-momentum uncertainty relation: $$ \sigma_x \sigma_p \geq \hbar/2,$$ where $\hbar$ denotes the reduced Planck constant and $\sigma_x$ is the standard deviation of the results when we measure the position $x$ many times on equally prepared particles. Analogously, $\sigma_p$ denotes the standard deviation of the results when we measure the momentum $p$.

Hence, if we prepare the particles such that the position $x$ is known very accurately, which means that $\sigma_x$ is very small, then our knowledge about the momentum becomes correspondingly worse: the lower bound $\sigma_p \geq \hbar/(2\sigma_x)$ becomes very large. This follows directly from the inequality $ \sigma_x \sigma_p \geq \hbar/2.$
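To get a feeling for the numbers, here is a small sketch that evaluates the lower bound $\hbar/(2\sigma_x)$ for an electron. The position spread of $10^{-10}$ m (roughly the size of an atom) is an illustrative assumption, and `min_momentum_spread` is a helper name introduced only for this example.

```python
HBAR = 1.054571817e-34           # reduced Planck constant in J*s
ELECTRON_MASS = 9.1093837015e-31  # electron mass in kg

def min_momentum_spread(sigma_x):
    """Smallest sigma_p (in kg*m/s) allowed by sigma_x * sigma_p >= hbar / 2."""
    return HBAR / (2.0 * sigma_x)

sigma_x = 1e-10                        # assumed position spread in metres
sigma_p = min_momentum_spread(sigma_x)
sigma_v = sigma_p / ELECTRON_MASS      # corresponding non-relativistic velocity spread

print(f"sigma_p >= {sigma_p:.3e} kg*m/s")
print(f"sigma_v >= {sigma_v:.3e} m/s")
```

For this choice of $\sigma_x$ the momentum spread is at least about $5.3 \times 10^{-25}$ kg m/s, which for an electron corresponds to a velocity spread of several hundred kilometres per second.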

We have already noted that a wave with a single sinusoidal or complex exponential component extends over all space and time, and that, if we wish to limit its extent, further sinusoidal components spanning a range of frequencies must, as in Section 13.3, be superposed. As with the Gaussian wavepacket of Sections 14.4.1 and 16.5, the tighter the wavepacket is to be confined, the greater the range of frequencies needed. This reciprocal dependence of the frequency range upon the spatial or temporal extent is known as the bandwidth theorem, and is a crucial concept for the analysis of wavepackets.

Chapter 17 in "Introduction to the Physics of Waves" by Tim Freegarde

The generalized uncertainty principle reads

\begin{equation} \sigma_A \sigma_B \geq \left| \frac{1}{2i} \langle [A,B] \rangle \right| . \end{equation}
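As a quick consistency check, we can insert $A = x$ and $B = p$. This uses the canonical commutator $[x,p] = i\hbar$, which is standard but is an assumption here since it is not stated above. The commutator term then evaluates to

$$ \left| \frac{1}{2i} \langle [x,p] \rangle \right| = \left| \frac{i\hbar}{2i} \right| = \frac{\hbar}{2}, $$

which is exactly the bound that appears in the position-momentum relation $\sigma_x \sigma_p \geq \hbar/2$. For two observables that commute, the same term vanishes and the relation puts no restriction on the product of their spreads.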


We have also argued that the rationale behind the Heisenberg indeterminacy principle (in the extreme cases in which one of the variables is sharply determined) can be understood in terms of the compatibility condition between the non-trivial identity of a state and the properties that can be consistently attributed to it. Indeed, an observable (such as $q$) that is not invariant under the automorphisms of a state (such as $|p\rangle$) cannot define an “objective” property of the latter. Hence, the expectation value function will have a non-zero dispersion. We have argued that this dispersion measures the extent to which the transformations generated by $q$ transform the state into a physically different state, i.e. the extent to which the non-rigidity of the state cannot “endure” the transformations generated by $q$.

Klein-Weyl's program and the ontology of gauge and quantum systems by Gabriel Catren

Heisenberg sometimes explained the uncertainty principle as a problem of making measurements. His most well-known thought experiment involved photographing an electron. To take the picture, a scientist might bounce a light particle off the electron's surface. That would reveal its position, but it would also impart energy to the electron, causing it to move. Learning about the electron's position would create uncertainty in its velocity; and the act of measurement would produce the uncertainty needed to satisfy the principle.

Physics students are still taught this measurement-disturbance version of the uncertainty principle in introductory classes, but it turns out that it's not always true. Aephraim Steinberg of the University of Toronto in Canada and his team have performed measurements on photons (particles of light) and showed that the act of measuring can introduce less uncertainty than is required by Heisenberg’s principle. The total uncertainty of what can be known about the photon's properties, however, remains above Heisenberg's limit.

Contrary to what is often believed, the Heisenberg inequalities (62) and the Robertson-Schrödinger inequalities (64) are not statements about the accuracy of our measurements; their derivation assumes perfect instruments (see the discussion in Peres [20], p. 93). Their meaning is that if the same preparation procedure is repeated a large number of times on an ensemble of systems and is followed by either a measurement of $x_j$ or a measurement of $p_j$, then the results obtained will have standard deviations $\Delta x_j$ and $\Delta p_j$. In addition, these measurements need not be uncorrelated; this is expressed by the statistical covariances $\Delta(x_j, p_j)$ appearing in the inequalities (64).

http://webzoom.freewebs.com/cvdegosson/SymplecticEgg_AJP.pdf

An uncertainty relation such as (4.54) is not a statement about the accuracy of our measuring instruments. On the contrary, its derivation assumes the existence of perfect instruments (the experimental errors due to common laboratory hardware are usually much larger than these quantum uncertainties). The only correct interpretation of (4.54) is the following: If the same preparation procedure is repeated many times, and is followed either by a measurement of $x$, or by a measurement of $p$, the various results obtained for $x$ and for $p$ have standard deviations, $\Delta x$ and $\Delta p$, whose product cannot be less than $\hbar/2$. There never is any question here that a measurement of $x$ “disturbs” the value of $p$ and vice-versa, as sometimes claimed. These measurements are indeed incompatible, but they are performed on different particles (all of which were identically prepared) and therefore these measurements cannot disturb each other in any way. The uncertainty relation (4.54), or more generally (4.40), only reflects the intrinsic randomness of the outcomes of quantum tests.

page 93 in Quantum Theory: Concepts and Methods by Peres

According to the uncertainty principle, there are certain pairs of variables, such as position and momentum, whose values cannot both be known at the same time with arbitrary accuracy.

In some sense, it completely encapsulates what is different about quantum mechanics compared to classical mechanics.

A philosopher once said ‘It is necessary for the very existence of science that the same conditions always produce the same results’. Well, they don’t!

- Richard Feynman

What's the origin of the uncertainty?

Quantum mechanics uses the generators of the corresponding symmetry as measurement operators. For instance, this has the consequence that a measurement of momentum is equivalent to the action of the translation generator. (Recall that invariance under translations leads us to conservation of momentum.) The translation generator moves our system a little bit and therefore the location is changed.

Physics from Symmetry by J. Schwichtenberg

[T]here exists also an explicit counterexample that demonstrates that it is possible in principle to measure energy with arbitrary accuracy during an arbitrarily short time-interval [9].

where Ref. [9] is Y. Aharonov and D. Bohm, “Time in quantum theory and the uncertainty relation for time and energy,” Phys. Rev. 122, 1649-1658 (1961)

A simple example is bandwidth in AM radio transmissions. A typical commercial AM station broadcasts in a frequency band extending about 5000 cycles/s (5 kc) on either side of the carrier wave frequency. Thus $$\Delta\omega = 2\pi\Delta\nu \approx 3 \times 10^4 \, \mathrm{s}^{-1} \tag{10.71}$$ and the station cannot send signals that resolve times less than a few $\times 10^{-5}$ seconds apart. This is good enough for talk and acceptable for some music.

A famous example of (10.62) comes from quantum mechanics. There is a completely analogous relation between the spatial spread of a wave packet, $\Delta x$, and the spread of $k$ values required to produce it, $\Delta k$: $$\Delta x \cdot \Delta k \geq 1/2.$$
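The bound on the product of the spatial spread and the spread in $k$ can be checked numerically for simple wave profiles. The sketch below makes a few assumptions that are not part of the quoted text: the profile $\psi(x)$ is real, so that the mean wavenumber is zero and the variance in $k$ equals $\int (\psi')^2 dx / \int \psi^2 dx$, and both spreads are standard deviations weighted by $\psi^2$. With $p = \hbar k$, multiplying the result by $\hbar$ gives the corresponding product of position and momentum spreads. The helper name `spreads` and the two test profiles are illustrative.

```python
import math

def spreads(psi, half_width=20.0, n_points=4001):
    """Return (delta_x, delta_k) for a real wave profile psi(x) that decays at the edges."""
    dx = 2 * half_width / (n_points - 1)
    xs = [-half_width + i * dx for i in range(n_points)]
    values = [psi(x) for x in xs]
    norm = sum(v * v for v in values)
    mean_x = sum(x * v * v for x, v in zip(xs, values)) / norm
    var_x = sum((x - mean_x) ** 2 * v * v for x, v in zip(xs, values)) / norm
    # Central differences approximate psi'(x) at the interior grid points.
    slopes = [(values[i + 1] - values[i - 1]) / (2 * dx)
              for i in range(1, n_points - 1)]
    var_k = sum(s * s for s in slopes) / norm
    return math.sqrt(var_x), math.sqrt(var_k)

def gaussian(x, width=1.5):
    """Gaussian profile whose squared amplitude has standard deviation `width`."""
    return math.exp(-x * x / (4 * width * width))

def sech(x):
    """A non-Gaussian bump, used for comparison."""
    return 1.0 / math.cosh(x)

gauss_dx, gauss_dk = spreads(gaussian)
sech_dx, sech_dk = spreads(sech)
print(f"Gaussian: delta_x * delta_k = {gauss_dx * gauss_dk:.4f}")
print(f"sech:     delta_x * delta_k = {sech_dx * sech_dk:.4f}")
```

The Gaussian profile sits right at the bound of 1/2, while the sech-shaped bump gives a slightly larger product (analytically $\pi/6$), consistent with the inequality.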

For more information, see e.g. Leon Cohen, “Time-Frequency Distributions-A Review”, Proc. IEEE 77, 941 (1989)